Why Highly Regulated Industries Need a Smarter AI

If you work in a highly regulated industry, the pressure to adopt AI comes with a different set of risks. A wrong answer could mean a compliance violation, legal exposure, or even a safety issue.

Most generative and RAG-based AI wasn’t built for that. Many of these systems are trained on public data, not your policies, and they can’t explain their reasoning or recognize when they’re out of their depth. Those gaps make them unsafe in high-stakes environments.

You need AI that operates within strict guardrails, follows your rules, uses only approved sources, and knows when to escalate. That’s the foundation of agentic AI, which doesn’t just generate responses. It understands your workflows, respects your obligations, and leaves a full audit trail behind.

4 Reasons Traditional AI Fails When It Has to Hold Up to Strict Regulations

AI failures don’t always look dramatic in the moment, but in regulated industries, even small gaps can carry real consequences. When you look closely at how traditional generative and RAG-based systems operate, the risks become clear.

They can pull from the wrong information source, drop key context, miss escalation triggers, and leave no record of how a decision was made. Those technical limitations become compliance risks.

1. Traditional AI Often Gives Answers from the Wrong Source

Many customer service tools that claim to be AI are built on top of models like GPT, Claude, or Gemini. These models generate responses based on patterns in the data they were trained on—often including public content that may not align with your policies. RAG systems add a retrieval layer, but unless they’re tightly configured, they can still pull from irrelevant or outdated articles, summarize content inaccurately, or ignore policy boundaries entirely.

For example, imagine a patient who’s messaged their healthcare provider’s chatbot to ask if a medication is safe to take with a pre-existing condition. The AI pulls from general medical content or public FAQs and says yes, but the provider’s internal guidance lists that condition as a contraindication.

The AI wasn’t trained on their internal guidance, so it gives incorrect advice. The patient takes the medication, has a reaction, and now the provider faces legal exposure without an audit trail or way to verify how the decision was made.

2. Traditional AI Can Lose the Thread Mid-Resolution

Many generative or RAG-based models were designed for single-turn interactions, not for completing multi-step processes. They process each prompt in isolation or with shallow memory, which means they don’t retain critical details as a conversation progresses. They can’t enforce workflows, keep a clear understanding of where a customer is in the process, or remember what’s already been verified unless that context is manually re-fed at every step.

For example, a customer might contact their investment platform’s AI chatbot to update the beneficiary on a retirement account. The AI collects identity verification, confirms the account, and starts the workflow. But partway through, it forgets the verified identity status and asks the customer to authenticate again. Worse, it processes the update on the wrong account type—say, a taxable brokerage account instead of the retirement account the customer originally referenced.

Now the platform has mishandled a legally binding request. There’s no clear record of what went wrong, and no way to trace or defend how the decision was made.

3. Traditional AI Often Doesn't Know When to Escalate

Most traditional AI systems aren’t built to recognize when they’re out of their depth. Generative models are optimized to keep answering, not to assess risk. They’ll generate a confident-sounding response even when the input is ambiguous, sensitive, or outside the scope of what they should handle.

These systems don’t include native confidence thresholds or escalation triggers unless you build them manually. They treat a password reset the same way they treat a billing dispute or a legal claim because they don’t understand the difference.

RAG-based tools have similar gaps. They retrieve relevant content, but they don’t evaluate whether that content actually resolves the question or whether the question itself requires human review.

For example, a customer who contacts their insurer’s AI chatbot to report storm damage might mention that the home is uninhabitable and that there’s water damage to the structure. The AI, trained on general property claim FAQs, assumes it’s a standard case and walks the customer through filing. But the policy includes special handling for total loss and emergency housing, which the AI never triggers.

Now the customer is delayed in getting temporary housing, and the insurer is exposed to financial and reputational risk. There’s no flag, escalation, or record that the issue was ever treated as urgent.

4. Traditional AI Doesn't Leave an Auditable Trail

Traditional AI systems don’t log which sources were used, which rules were applied, or why a specific answer was given. That becomes a problem when regulators, auditors, or legal teams come asking.

Generative models produce outputs based on language patterns, not a defined process, so there’s no way to reconstruct what led to a specific response. RAG systems retrieve information from sources like help articles or policy docs, but they don’t track how that information was used or whether it was used correctly.

That means there’s no consistent mapping from input to output. The same prompt might yield different responses based on subtle context changes or model drift, and there’s no documentation showing which policy was followed or why.

Say a customer contacts a mobile carrier to dispute an international roaming charge. The AI apologizes and removes the fee, but during an internal audit weeks later, the finance and legal teams can’t determine why the charge was waived. There’s no record of the customer’s eligibility, the source of the policy, or the rationale behind the decision. Now the company has violated its own billing policies, set a precedent it can’t explain, and has no way to defend the decision if challenged.

Agentic AI Has the Power to Act Autonomously and Responsibly

Agentic AI works in regulated environments because it reflects the same controls, checks, and traceability that your compliance process already requires. Where traditional AI just generates answers, agentic AI executes policies based on your data, your workflows, and your escalation rules.

Agentic AI Reliably Pulls Information from the Right Source

Customer support tools built on agentic AI are designed to take action, not just generate responses. That means they must be connected to internal systems, like your help desk, CRM, knowledge base, or billing platform. These are the systems where actions happen and where your real data lives. Without that connection, the AI can’t follow policy, complete a task, or resolve an issue accurately.

Unlike traditional AI, agentic systems don’t guess. If they’re issuing a refund or updating a record, they pull the correct information from the source of truth and follow the exact steps your policy requires.

Take the earlier healthcare example where a patient messaged their provider’s chatbot to ask if a medication is safe to take with a pre-existing heart condition. A chatbot powered by agentic AI would check the patient’s record, confirm the condition, and reference internal clinical guidance. It would recognize the risk and could reply:

“Thanks for reaching out. Based on your medical history, this medication may not be safe. I’m escalating this to a licensed clinician to review and follow up directly.”

The AI flags the case, logs the full interaction, and routes it into the appropriate clinical workflow with a complete audit trail of how the decision was made.
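To make the idea concrete, here is a minimal Python sketch of a source-of-truth check for the healthcare scenario. Everything in it is hypothetical and for illustration only: the `INTERNAL_GUIDANCE` and `PATIENT_RECORDS` tables, the IDs, and the `check_medication` function stand in for whatever internal systems a provider actually uses. The point is simply that the decision comes from approved internal data, and that a missing source leads to a handoff rather than a guess.

```python
from dataclasses import dataclass

# Hypothetical internal guidance: medication -> conditions listed as contraindications.
INTERNAL_GUIDANCE = {
    "medication_x": {"heart condition", "kidney disease"},
}

# Hypothetical patient records keyed by patient ID.
PATIENT_RECORDS = {
    "p-001": {"conditions": {"heart condition"}},
    "p-002": {"conditions": set()},
}


@dataclass
class Decision:
    action: str     # "escalate" or "answer"
    reason: str
    sources: tuple  # which approved sources were consulted


def check_medication(patient_id: str, medication: str) -> Decision:
    record = PATIENT_RECORDS.get(patient_id)
    guidance = INTERNAL_GUIDANCE.get(medication)

    # No approved source available: do not guess, hand off instead.
    if record is None or guidance is None:
        return Decision("escalate", "no approved source for this question", ())

    sources = ("patient_record", "internal_clinical_guidance")
    conflicts = record["conditions"] & guidance
    if conflicts:
        # The patient's record conflicts with internal guidance: route to a clinician.
        return Decision("escalate", f"contraindication: {sorted(conflicts)[0]}", sources)

    return Decision("answer", "no contraindication found in internal guidance", sources)
```

In this sketch, `check_medication("p-001", "medication_x")` returns an escalation because the patient’s record lists a condition that the internal guidance flags, and the returned `sources` tuple records what was consulted.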

Agentic AI Maintains Context Across Workflows

Completing tasks requires a different kind of architecture. To maintain a persistent understanding of where the customer is in the process, agentic AI is configured to retain context across multiple steps, apply conditional logic, trigger escalations when needed, and call external systems mid-process to complete an action in real time.

Consider our earlier investment scenario. That customer contacted their investment platform to update the beneficiary on a retirement account. An agentic AI system would verify the customer once, log that authentication, and retain it throughout the entire workflow. It would correctly identify the account type and apply the change using the right policy.
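As a rough illustration of what retaining context can look like, the Python sketch below carries a single context object through the beneficiary workflow. The `WorkflowContext` class, its fields, and the two step functions are assumptions made up for this example, not a description of any vendor’s implementation. The sketch shows two things: verification is stored once and reused, and a mismatch between the account on file and the account being changed stops the update.

```python
from dataclasses import dataclass, field


@dataclass
class WorkflowContext:
    """State that persists across every step of one customer workflow."""
    customer_id: str
    account_id: str
    account_type: str
    identity_verified: bool = False
    completed_steps: list = field(default_factory=list)


def verify_identity(ctx: WorkflowContext, passed_check: bool) -> None:
    if ctx.identity_verified:
        return  # already verified earlier in this workflow; do not ask again
    if passed_check:
        ctx.identity_verified = True
        ctx.completed_steps.append("verify_identity")


def update_beneficiary(ctx: WorkflowContext, requested_account_type: str,
                       beneficiary: str) -> str:
    # Guard 1: the update cannot run without a verified identity.
    if not ctx.identity_verified:
        return "blocked: identity not verified"
    # Guard 2: the change must target the account type the customer referenced.
    if ctx.account_type != requested_account_type:
        return "escalate: account type mismatch"
    ctx.completed_steps.append("update_beneficiary")
    return f"beneficiary on {ctx.account_id} set to {beneficiary}"
```

Because both steps read and write the same `ctx`, the verified status and account type travel with the request instead of being re-fed at every turn.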

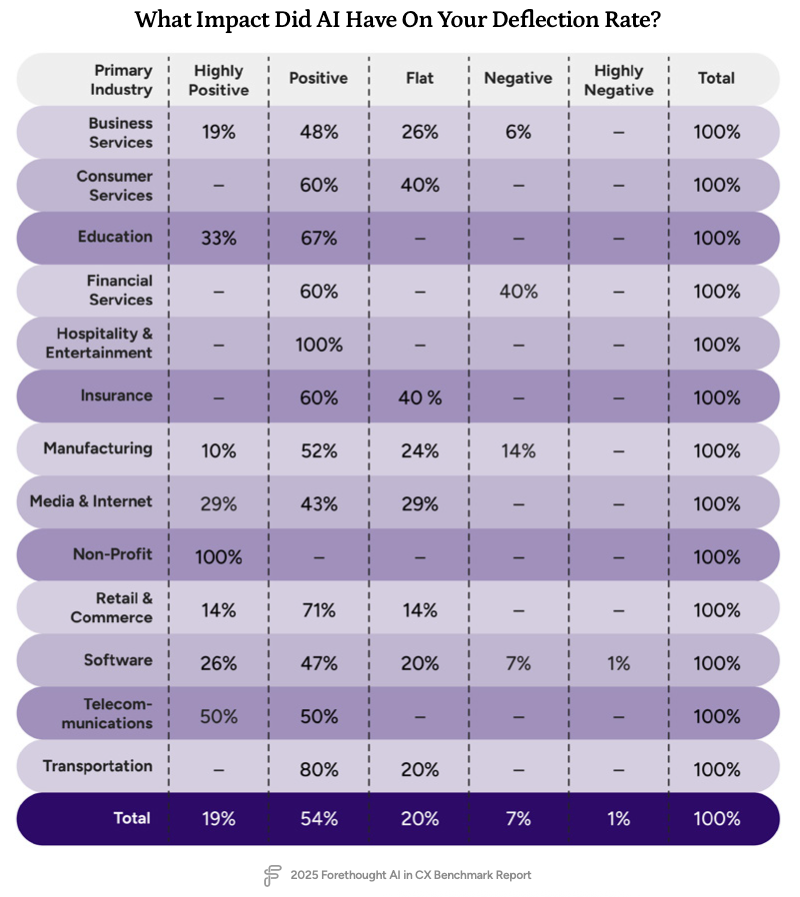

When that kind of system is in place, metrics like deflection rate improve because AI is actually resolving the issue, not just responding to it. In fact, 60% of financial services organizations say AI had a positive impact on their deflection rate, according to our 2025 AI in CX Benchmark Report:

As the chart shows, financial services isn’t alone. Across industries like education, retail, and telecom, the majority of teams using AI reported a positive or highly positive impact on deflection.

At Forethought, this execution framework is built into Autoflows, our agentic AI technology. Autoflows are structured workflows that preserve context from verified inputs to dynamic decision branches.

When Forethought’s chat and email agents receive a query, they track where the customer is in the flow, what's already been captured, and which rules apply next. That allows them to handle ID verification, policy lookups, multi-step forms, and branching logic without losing the thread. Because they’re integrated with your systems, the context they carry forward is real.

Agentic AI Knows When to Escalate with Human-in-the-Loop Triggers

Agentic AI is built to know its limits. When a request falls outside of predefined conditions—whether due to complexity, ambiguity, or risk—it can escalate the case automatically with full context intact. That’s possible because these systems are configured with specific guardrails: confidence thresholds, escalation logic, fallback rules, and human-in-the-loop triggers that keep people involved when necessary.

Revisit the earlier insurance example. A customer contacts their insurer’s chatbot to report storm damage. They say the home is uninhabitable and that there’s structural flooding. A traditional AI might treat this as a standard claim and proceed without escalation. But an agentic system would recognize the severity based on policy logic or language signals and route the case to a human agent with all relevant details already captured.
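A simplified Python sketch of that routing decision might look like the following. The threshold value, the list of high-risk phrases, and the `route_claim` function are all hypothetical; a real deployment would take them from the insurer’s own policy rules rather than hard-coded constants, and would likely use richer signals than substring matching.

```python
from dataclasses import dataclass

# Hypothetical values for illustration; real ones would come from policy.
CONFIDENCE_THRESHOLD = 0.8
HIGH_RISK_SIGNALS = ("uninhabitable", "total loss", "structural")


@dataclass
class Routing:
    escalate: bool
    reason: str


def route_claim(message: str, confidence: float) -> Routing:
    text = message.lower()

    # Policy signals take priority: severity overrides model confidence.
    matched = [signal for signal in HIGH_RISK_SIGNALS if signal in text]
    if matched:
        return Routing(True, "high-risk signal: " + ", ".join(matched))

    # Fallback rule: low confidence also goes to a human.
    if confidence < CONFIDENCE_THRESHOLD:
        return Routing(True, "confidence below threshold")

    return Routing(False, "standard claim workflow")
```

Note the ordering: the high-risk check runs before the confidence check, so a confident model still cannot push a severe case down the standard path.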

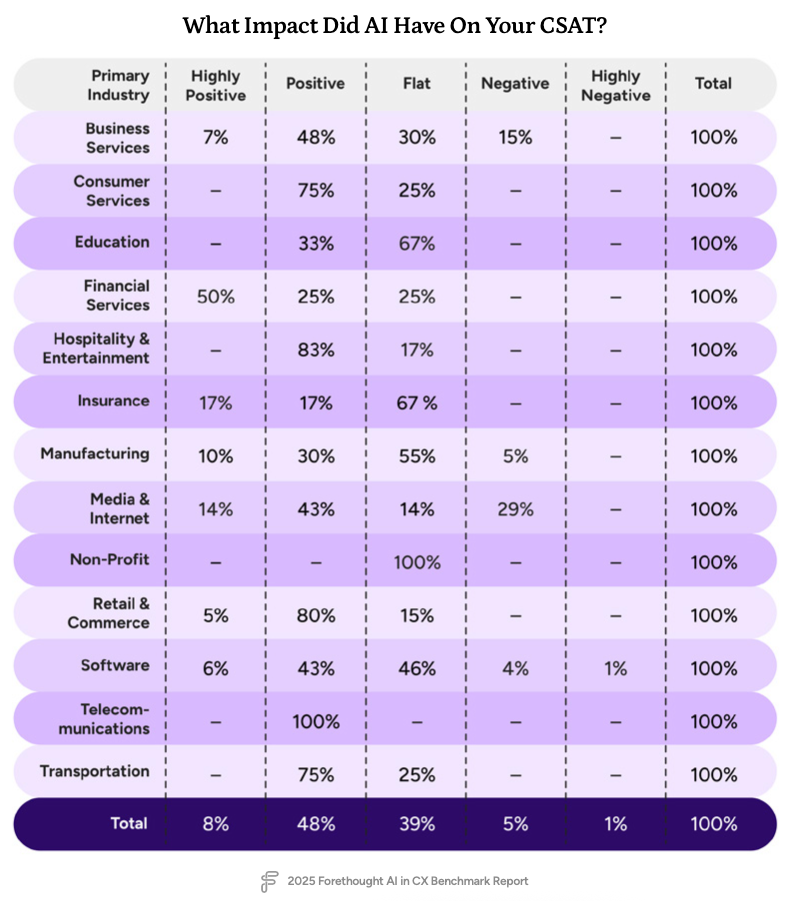

That’s the difference between guessing and knowing when to stop. And when escalation happens in the right moments, customer satisfaction improves. In insurance, for example, 34% of companies reported a positive or highly positive impact on CSAT when using AI:

The results reflect a broader trend: when agentic systems are set up to involve humans where it counts, the experience gets better for everyone involved.

These escalation triggers are also built into Autoflows. If the system isn’t confident in the next step, you can configure it to stop or escalate based on workflow-level thresholds. It can route based on complexity, sentiment, system status, or even customer value. When it does escalate, the full ticket history, verified inputs, and AI reasoning are transferred with it. Escalation rules are governed by your policies, so high-risk or high-impact cases get to the right person at the right time.

Agentic AI Automatically Creates an Audit Trail

While traditional AI systems can’t explain how or why a decision was made, agentic AI operates differently. Because it follows a defined workflow instead of generating one-off responses, every step can be tracked and recorded. Agentic systems log what the user asked, which internal system or source was used, what action the AI took, which workflow was followed, and whether escalation occurred.

That level of traceability is possible because the architecture is built for it using structured execution logic, deterministic decision paths, and direct integrations with the tools and data that power your operations.

Consider the earlier telecom example. A customer had contacted their mobile carrier to dispute an international roaming charge. An agentic system would check the customer’s plan, reference the correct billing policy, confirm refund eligibility, and issue the credit. Behind the scenes, the system logs:

- The customer’s request

- The Autoflow used

- The steps triggered based on plan type

- The systems accessed (like the billing database)

- The confidence threshold that was crossed

- The timestamp, outcome, and any escalations
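The fields above map naturally onto a structured log entry. The Python sketch below is one assumed shape for such a record; the `AuditRecord` class, its field names, and the sample values are invented for illustration and do not reflect an actual log schema.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone


@dataclass
class AuditRecord:
    request: str                 # what the customer asked
    workflow_id: str             # which workflow handled it
    steps: list                  # steps triggered, in order
    systems_accessed: list       # e.g. the billing database
    confidence: float            # score the system assigned
    confidence_threshold: float  # threshold it had to cross
    outcome: str
    escalated: bool
    # Recorded in UTC at creation time so entries sort consistently.
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )


def to_log_line(record: AuditRecord) -> str:
    """Serialize one record as a single JSON line for an append-only log."""
    return json.dumps(asdict(record), sort_keys=True)


# Hypothetical entry for the roaming-charge dispute.
example = AuditRecord(
    request="Dispute international roaming charge",
    workflow_id="roaming-dispute-v1",
    steps=["check_plan", "confirm_eligibility", "issue_credit"],
    systems_accessed=["billing_database"],
    confidence=0.93,
    confidence_threshold=0.8,
    outcome="credit_issued",
    escalated=False,
)
print(to_log_line(example))
```

Writing one self-describing JSON line per execution keeps each decision reviewable on its own, without reconstructing state from other entries.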

If an auditor reviews the case later, there’s no ambiguity. The entire decision path is documented and defensible. Within Autoflows, each execution is logged with input, output, source, and reasoning steps.

Forethought's voice, chat, and email agents capture information like which macros, CRM fields, or help articles were used, while logs include timestamps, triggered rules, workflow IDs, and confidence scores.

Trust Isn't Optional for Regulated Industries

In high-stakes industries, it’s not enough for AI to get the answer right. It has to follow your process, which includes applying the correct policy, retaining the right context, escalating when needed, and creating a complete record of what happened and why.

Forethought’s omnichannel agentic AI solutions make that possible by acting like part of your business. Our solutions respect your rules, your workflows, and your risk thresholds.

To see what it looks like when AI doesn’t cut corners, request a Forethought demo today.
